
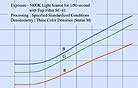

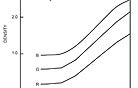

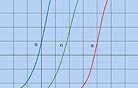

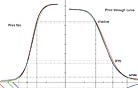

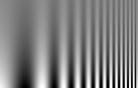

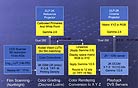

Negative film is not designed to display the recorded image. To be seen, the negative must be printed directly onto positive print film or, when a release calls for large numbers of prints, go through the intermediate photochemical steps of creating an interpositive (IP) and then multiple internegatives (INs). A check print is made from an IN, and only then can the images found on the original camera negative be displayed. Print film does not reproduce everything that was recorded on the negative. “The purpose of the negative is to capture a virtual scene,” says Kodak image scientist Douglas Walker, “but there is no practical way to reproduce those same luminance ratios. A scene can have a dynamic range of 100,000:1 or 1,000,000:1, and it would be cost-prohibitive to try to reproduce that in every theater. So you need a way of creating a rendition of the scene that is convincing, yet more practical to re-create. The purpose of print film is to do just that.”

The sensitometric curve of print film is much steeper than that of negative film. Just compare gammas: 0.6 for negative vs. 2.6 or more for print. As a result, highlights and shadows are compressed in the toe and shoulder of the print stock, respectively (see diagram a, diagram b and diagram c). “This is kind of the ‘film look,’” says Technology Committee chair Curtis Clark, ASC, “which still has gradation in the shoulder and toe, whereas with video it just clips. That’s something I think we have grown accustomed to culturally and aesthetically as well: having that ability to see particularly in the highlights and shadows because there are vital nuances and details there.” Indeed, the cinematographer can control how much the audience sees into the highlights and shadows by increasing or decreasing print density via printer lights.
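Walker's dynamic-range figures and the two gammas quoted above can be turned into numbers with a short sketch. It assumes the usual textbook simplification: only the straight-line portions of the two curves are considered (toe and shoulder are ignored), in which case the overall gamma of the negative-to-print chain is the product of the two gammas. The function names are illustrative only and do not come from any library.

```python
import math

# Gamma values quoted in the text (straight-line portion of each curve).
NEGATIVE_GAMMA = 0.6
PRINT_GAMMA = 2.6


def system_gamma(neg_gamma: float, print_gamma: float) -> float:
    """Overall gamma of a negative-to-print chain, straight-line regions only.

    Negative density is D_n = neg_gamma * logE + a. The print is exposed
    through the negative, so print log-exposure falls by exactly D_n, and
    print density is D_p = print_gamma * (k - D_n) + b. The slope of D_p
    against scene logE is therefore -neg_gamma * print_gamma; this function
    returns its magnitude.
    """
    return neg_gamma * print_gamma


def dynamic_range_in_log_units(contrast_ratio: float) -> float:
    """Convert a luminance ratio such as 100,000:1 into log10 units."""
    return math.log10(contrast_ratio)


def dynamic_range_in_stops(contrast_ratio: float) -> float:
    """Convert a luminance ratio into photographic stops (factors of 2)."""
    return math.log2(contrast_ratio)


if __name__ == "__main__":
    print(f"System gamma: {system_gamma(NEGATIVE_GAMMA, PRINT_GAMMA):.2f}")
    for ratio in (100_000, 1_000_000):
        print(f"{ratio:>9,}:1 = {dynamic_range_in_log_units(ratio):.1f} log units"
              f" = {dynamic_range_in_stops(ratio):.1f} stops")
```

With the quoted values the product is about 1.56, and higher for print stocks steeper than 2.6. A scene range of 100,000:1 works out to 5 log units, or about 16.6 stops; 1,000,000:1 is 6 log units, or about 19.9 stops.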
In one of the eccentricities of the photochemical chain, print film’s sensitometric curve is the inverse of the negative curve: its shoulder contains shadows instead of highlights. To visualize the relationship between the two curves, place the print curve on top of the negative curve and rotate the print curve 90 degrees. (This is known as a Jones diagram, named after its inventor, Lloyd Jones.) When a printer light is adjusted during print film exposure, say green by +3 points, the negative’s curve slides a fixed distance of 3 x 0.025 = 0.075 log exposure along the print curve, modulating the amount of light that reaches the print film, while the print film’s curve remains fixed. Because the vertical density axis of the negative maps onto the horizontal log-exposure axis of the print, printer light changes are quantifiable and repeatable.

The June 1931 issue of American Cinematographer contains a one-paragraph blurb about a new natural-color motion-picture process shown to England’s premier scientific body, the Royal Society of London. (James Clerk Maxwell had exhibited the first color photograph in London 70 years earlier, at the Royal Institution.) This motion-picture process used a film base imprinted with a matrix of a half-million minute red, green and blue squares per inch of film. That sounds like a “pixelated” concept, about 40 years ahead of its time.

An image can be scanned and mathematically encoded into any number of lines, both horizontally and vertically; this is known as its spatial resolution. Typically there are more samples across the frame than down it, because a frame is wider than it is tall, so a scan line running from side to side carries more information than one running from top to bottom. Each line is made up of individual pixels, and each pixel contains one red, one green and one blue component.
The encoded values of those components, together with the characteristics of the specific display device, determine what color the pixel will be.

Scanning at 2K resolution has been the most popular and most feasible option. A true 2K frame is 2048x1556 pixels at 4 bytes per pixel, or 12,746,752 bytes in file size. (The factor of 4 arises because the three 10-bit Cineon RGB components, 30 bits in all, are packed into 32 bits, which is 4 bytes; two bits are wasted.) 4K (4096x3112) is fast becoming viable as storage costs drop and processing and transport speeds increase. Spider-Man 2 (AC July ’04) was the first feature to undergo a 4K scan and 4K finish. Bill Pope, ASC screened a series of 2K and 4K resolution tests for director Sam Raimi, the editors and the producers, and all preferred the 4K input/output. The perceived improvement in resolution is that obvious. From 6K upward, that perception starts to wane for some people. There are scanners on the market that can scan a frame of film at 10K resolution, but scanning an entire movie at 10K right now is about as rapid as using the Pony Express to send your mail.

The ideal scanning resolution is still a topic of debate, though many favor 8K. “In order to achieve a limiting resolution digitally of what film is capable at aspect ratio, you really need to scan the full frame 8000 by 6000 to get a satisfactory aliasing ratio of about 10 percent,” says Research Fellow Roger Morton, who recently retired from Kodak. Limiting resolution is the finest detail that can be observed when a display system is given a full-modulation input. (This relates to the concept of modulation transfer function, which I’ll get to shortly.)

Aliasing is the bane of digital imaging. In the May/June 2003 issue of SMPTE Motion Imaging Journal, Morton identified 11 types of visible aliasing, which he labeled A through K.
They include artifacts such as variations in line width and position; fluctuations in luminance along dark and light lines that create basket-weave patterns; coloration due to differences in the response of color channels (as in chroma subsampling); and high-frequency image noise (also known as mosquito noise). “They’re more serious in motion pictures than in still pictures,” he points out, “because many of the aliasing artifacts show up as patterns that move at a different speed than the rest of the image. The eye is sensitive to motion and will pick that motion up.”

Scanning (digitization) turns a smoothly undulating analog wave into a staircase. Converting the digital wave back to an analog wave does not necessarily take the smoother diagonal route between two sample points; it often moves horizontally and then straight up to the next point on the wave. It’s like terracing rolling hillsides, except that the earth that once occupied the now-level terraces has been tossed aside, and so has the image information between the two samples. Missing information can lead to artifacts, but the higher the sample rate, the closer together the sample points will be, and the less information is thrown out. The result is a smoother wave reconstructed from the digital file. Deficiencies in the encoding/digitization process, including low resolution and undersampling, are the root of these distracting digital problems, and sampling at a higher rate alleviates them.

A 2K image is a 2K image is a 2K image, right? It depends. One 2K image may appear better in quality than another. For instance, suppose you have a 1.85 frame scanned on a Spirit DataCine at its nominal 2K resolution.
What the DataCine really does is scan 1714 pixels across the Academy frame (1920 from perf to perf), then digitally up-res the result to 1828 pixels, which is the Cineon Academy camera aperture width (or to 2048 if scanning from perf to perf, including the soundtrack area). The bad news is that you started out with less image information, only 1714 pixels, and the remaining 114 pixels of useful image were never captured; instead they were re-created to flesh the resolution out to 1828. You know how re-creations, though similar, are not the real thing? That applies here. Scan the same frame on a scanner capable of 1828, 1920 or even 2048 pixels across the Academy aperture, and you would have a digital image with more initial information to work with.

Now take that same frame and scan it on a new 4K Spirit at 4K resolution, 3656x2664 over the Academy aperture, then downsize it to an 1828x1332 2K file. Sure, the end resolution of the 4K-originated file is the same as that of the 2K-originated file, but the image from 4K origination looks better to the discerning eye. The 3656x2664 scan contains a tremendous amount of extra image information from which to downsample to 1828x1332, which has the same effect as oversampling does in audio.

In 1927, Harry Nyquist, Ph.D., a Swedish immigrant working for AT&T, determined that to create an adequate digital representation of an analog signal, the signal must be sampled at regular intervals at more than twice the frequency of its highest-frequency component. This minimum sampling rate, twice the highest frequency in the signal, is called the Nyquist rate. Failing to heed this theorem and littering your image with artifacts is known as the Nyquist annoyance; it comes with a pink slip. The problem with Nyquist sampling is that it requires perfect reconstruction of the digital information back to analog to avoid artifacts.
Because real display devices are not capable of this, the wave must be sampled well above the Nyquist limit (oversampling) to minimize artifacts.
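Nyquist's rule can be illustrated in the scanning context of this article with a small sketch. It assumes ideal point sampling of a sinusoidal test grating along one scan line (a real scanner's aperture, optics and film grain are ignored). The detail frequency of 1200 cycles across the frame is a hypothetical value chosen for illustration, and the helper names are made up for this example. Detail finer than half the pixel count across the frame, the Nyquist limit, folds back and masquerades as a coarser pattern.

```python
import math


def sample_grating(cycles_per_width: float, pixels_per_width: int) -> list[float]:
    """Point-sample one scan line of a sinusoidal test grating.

    Pixel k sits at position x = k / pixels_per_width across the frame
    (0 = left edge, 1 = right edge). Ideal point sampling is assumed; a
    real scanner's aperture and optics would also blur the grating.
    """
    return [math.sin(2 * math.pi * cycles_per_width * k / pixels_per_width)
            for k in range(pixels_per_width)]


def apparent_frequency(cycles_per_width: float, pixels_per_width: int) -> float:
    """Frequency the sampled grating appears to have after sampling.

    Anything above the Nyquist limit (pixels_per_width / 2) folds back
    into the range 0..Nyquist limit.
    """
    f = cycles_per_width % pixels_per_width
    return min(f, pixels_per_width - f)


if __name__ == "__main__":
    detail = 1200  # hypothetical fine detail, in cycles across the frame
    for width in (2048, 4096):
        print(f"{width} px: Nyquist limit {width / 2:.0f} cycles; "
              f"{detail}-cycle detail appears as "
              f"{apparent_frequency(detail, width):.0f} cycles")

    # At 2048 pixels, the samples of the 1200-cycle grating are the same as
    # those of an 848-cycle grating with inverted phase, so their sum is ~0.
    fine = sample_grating(1200, 2048)
    alias = sample_grating(848, 2048)
    print("max |fine + alias|:", max(abs(a + b) for a, b in zip(fine, alias)))
```

At 2048 pixels the Nyquist limit is 1024 cycles, so the hypothetical 1200-cycle detail is recorded as a false 848-cycle pattern; at 4096 pixels it is captured at its true frequency. This folding is one simple form of the aliasing discussed above.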
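The 2K file-size figure quoted earlier follows from packing each pixel's three 10-bit code values into one 32-bit word. The sketch below assumes a specific bit layout (red in bits 31-22, green in 21-12, blue in 11-2, bits 1-0 unused). That layout is a common arrangement for 10-bit Cineon/DPX data but is stated here as an assumption and should be checked against the format specification. The function names are hypothetical, and file headers are not counted.

```python
def pack_rgb10(r: int, g: int, b: int) -> int:
    """Pack three 10-bit code values (0..1023) into one 32-bit word.

    Assumed layout: red in bits 31-22, green in 21-12, blue in 11-2;
    bits 1-0 are the two wasted padding bits.
    """
    for v in (r, g, b):
        if not 0 <= v <= 1023:
            raise ValueError("10-bit code values must be in 0..1023")
    return (r << 22) | (g << 12) | (b << 2)


def unpack_rgb10(word: int) -> tuple[int, int, int]:
    """Recover the three 10-bit values from a word packed as above."""
    return (word >> 22) & 0x3FF, (word >> 12) & 0x3FF, (word >> 2) & 0x3FF


def frame_bytes(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Uncompressed image payload in bytes (headers not included)."""
    return width * height * bytes_per_pixel


if __name__ == "__main__":
    for name, (w, h) in {"2K": (2048, 1556), "4K": (4096, 3112)}.items():
        # 2K -> 12,746,752 bytes; 4K -> 50,987,008 bytes
        print(f"{name} {w}x{h}: {frame_bytes(w, h):,} bytes")

    word = pack_rgb10(1023, 512, 0)
    print(f"packed word: {word:#010x}, unpacked: {unpack_rgb10(word)}")
```

Because 4096x3112 has exactly four times as many pixels as 2048x1556, a 4K frame at the same packing takes four times the storage, about 51 million bytes per frame.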
